If you are a small business, it can be really hard to stay on top of social media. You could hire someone, but the decision is often a trade-off between time and money.

With tariffs adding pressure, small business owners need to get more out of the channels available to them, and social is a very important one.

When time is tight, it is tempting to post the same content on every channel. Reusing the same visuals across channels is sometimes necessary because of the time involved, but writing copy geared toward your audience on each channel is a great use of AI.

By using ChatGPT and giving it specific instructions, you can generate a couple of options that are tailored to the platform and the content you are trying to promote.

When you create content for social platforms, you can tailor it to each platform’s format and audience. The prompt below is very general. If you have a specific target within a platform (SEO professionals, in my case), you can add that detail to the prompt.

I ask for multiple options in both short and long formats. Why? Generating several versions makes it easier to find the one closest to what you are trying to communicate.

If you have a PRO version, you can do this in a project that contains your brand guide. Don’t have a brand guide? Just put a few sentences describing yourself in a Word document and use it as a consistent reference.

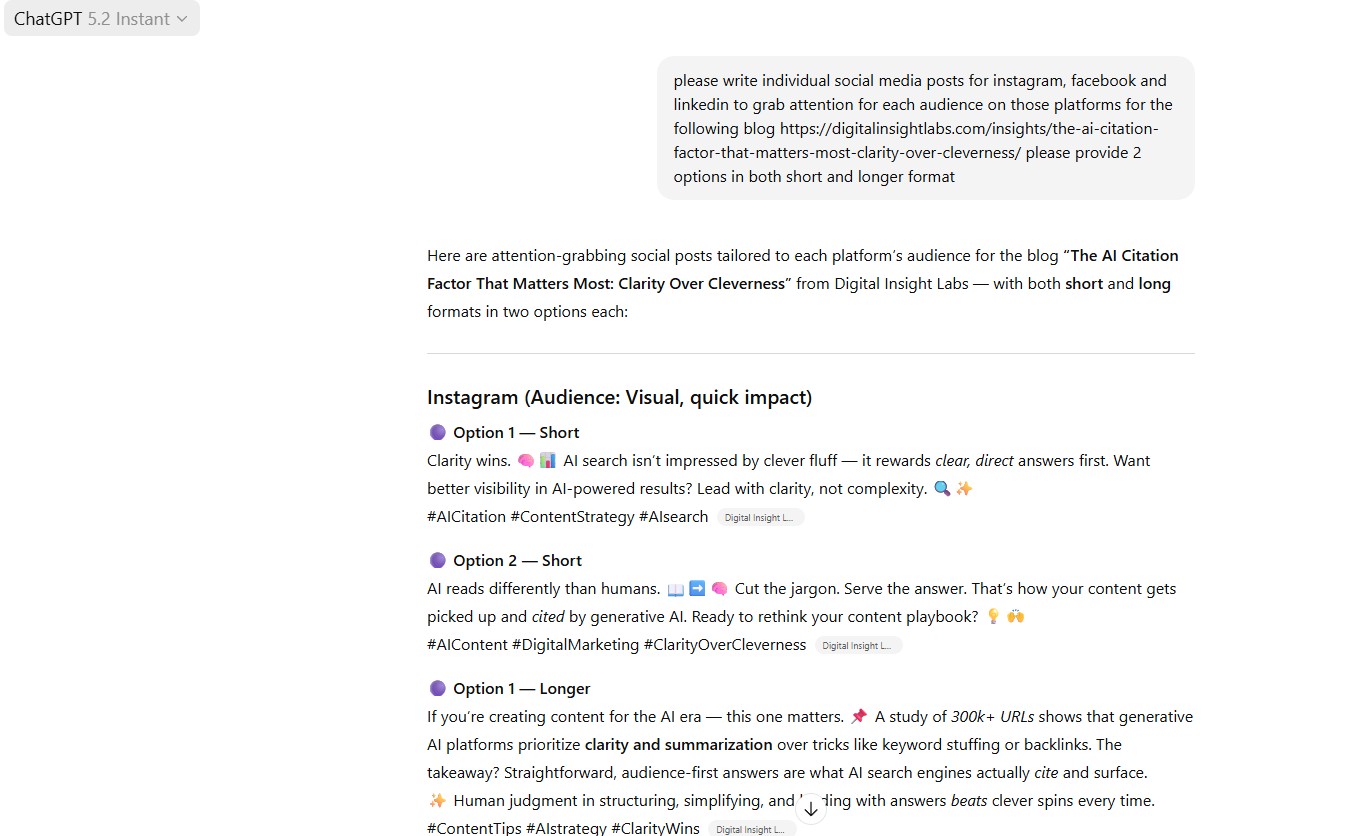

THE PROMPT

please write individual social media posts for instagram, facebook and linkedin to grab attention for each audience on those platforms for the following blog [URL]. please provide 2 options in both short and longer format.
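If you reuse this prompt for every new blog post, it can help to turn it into a fill-in-the-blank template. The Python sketch below is only an illustration: the function name `build_social_prompt` and its parameters are made up for this example, and the wording of the optional audience sentence is an assumption. It only builds the text you would paste into ChatGPT; it does not call any API.

```python
def build_social_prompt(blog_url,
                        platforms=("instagram", "facebook", "linkedin"),
                        options=2,
                        target_audience=None):
    """Return a prompt string modeled on the one shown above.

    All names here are hypothetical helpers for illustration.
    """
    platforms = list(platforms)
    if not platforms:
        raise ValueError("at least one platform is required")

    # Join the platform names the way the original prompt does:
    # "instagram, facebook and linkedin"
    if len(platforms) == 1:
        platform_list = platforms[0]
    else:
        platform_list = ", ".join(platforms[:-1]) + " and " + platforms[-1]

    prompt = (
        f"please write individual social media posts for {platform_list} "
        "to grab attention for each audience on those platforms "
        f"for the following blog {blog_url}. "
        f"please provide {options} options in both short and longer format."
    )

    # Optional detail: name a specific target within the platforms
    # (assumed wording, adjust to taste).
    if target_audience:
        prompt += f" my target audience on these platforms is {target_audience}."

    return prompt


if __name__ == "__main__":
    # Placeholder URL; swap in your own blog post.
    print(build_social_prompt("https://example.com/my-blog-post",
                              target_audience="SEO professionals"))
```

Changing `options` or the `platforms` list is an easy way to experiment with how many versions you get back and for which channels.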

THE RESULT

Here are attention-grabbing social posts tailored to each platform’s audience for the blog “The AI Citation Factor That Matters Most: Clarity Over Cleverness” from Digital Insight Labs — with both short and long formats in two options each:

Instagram (Audience: Visual, quick impact)

🟣 Option 1 — Short

Clarity wins. 🧠📊 AI search isn’t impressed by clever fluff — it rewards clear, direct answers first. Want better visibility in AI-powered results? Lead with clarity, not complexity. 🔍✨

#AICitation #ContentStrategy #AIsearch

🟣 Option 2 — Short

AI reads differently than humans. 📖➡️🧠 Cut the jargon. Serve the answer. That’s how your content gets picked up and cited by generative AI. Ready to rethink your content playbook? 💡🙌

#AIContent #DigitalMarketing #ClarityOverCleverness

🟣 Option 1 — Longer

If you’re creating content for the AI era — this one matters. 📌 A study of 300k+ URLs shows that generative AI platforms prioritize clarity and summarization over tricks like keyword stuffing or backlinks. The takeaway? Straightforward, audience-first answers are what AI search engines actually cite and surface.

✨ Human judgment in structuring, simplifying, and leading with answers beats clever spins every time.

#ContentTips #AIstrategy #ClarityWins

🟣 Option 2 — Longer

Stop writing for robots and start writing for understanding. 🤝 New data proves generative AI isn’t after clever language — it’s after clarity. Content that explains what the reader needs in the fewest mental hops wins in AI search. This isn’t SEO vs AI — it’s humanity + clarity vs noise.

Lead with answers. Respect the reader’s time. Let AI discover you for the right reasons. 🚀

#ContentCreation #AIvisibility #MarketingInsights

Facebook (Audience: Engaged, discussion-oriented)

🔵 Option 1 — Short

Why are some articles actually referenced by AI search tools? It comes down to clarity over cleverness. Simple, structured, answer-first content outperforms flashy language — even if it’s SEO optimized. Clarity is the new edge. 📈

Read more ↓

🔵 Option 2 — Short

If AI is part of your content distribution strategy — your best play might be to trim the fluff and sharpen the answers. The data says clarity correlates with AI citations more than backlinks, keywords, or fancy copy. 🤔

Want the full scoop? 📖

🔵 Option 1 — Longer

In the age of generative AI, content that answers first, then explains next, gets noticed — not the work with dense language or crafty SEO tricks. A massive analysis of AI citations shows that clarity and summarization are the strongest predictors of AI visibility.

The implication: your editorial thinking — not just your tech stack — is what will get you discovered.

Read the full post and rethink how you structure content for AI audiences. 🔗

🔵 Option 2 — Longer

AI search platforms are rewriting the rules of relevance. Traditional SEO signals still matter, but when it comes to AI citations, the leading factors are clarity, structure, and Q&A-style formatting. That means ditching ambiguity and leading with the answer your audience is actually seeking.

When AI prioritizes comprehension over cleverness, human editorial judgment becomes a major advantage.

Dive into the insights and adjust how you write for discovery in 2026 and beyond. 📘

LinkedIn (Audience: Professional, strategic focus)

🟢 Option 1 — Short

New research on AI discovery shows something counter-intuitive: clear, direct responses beat clever language for AI citations. If you’re writing for AI search visibility, structure and clarity are your competitive differentiators. 🔍📊

#ContentStrategy #AIsearch #ThoughtLeadership

🟢 Option 2 — Short

In a landscape where AI influences visibility, answer clarity matters more than keyword density or backlinks. Leadership content that’s easy to parse and structured wins in AI-powered results.

Rethink how you convey value — with precision. 💡

#MarketingLeadership #AI #ContentOps

🟢 Option 1 — Longer

If your team is investing in content for AI search, this insight should shift how you work. The data from a large-scale AI optimization study shows clarity and summarization as the strongest signals correlated with AI citations — outpacing traditional SEO metrics like backlinks.

That elevates the role of editorial strategy: deciding what the audience needs to know first and delivering it with simplicity and structure.

It’s not AI vs humans — it’s human clarity helping AI surface better answers.

#StrategicContent #AIecosystem #DigitalGrowth

🟢 Option 2 — Longer

AI platforms may be transforming search, but the core of meaningful content hasn’t changed: serve the audience with clarity. The most cited content in generative AI isn’t the most verbose or keyword-stuffed — it’s the content that gets straight to the point and anticipates audience needs.

For content leaders, the strategic imperative now includes:

✔ answer-first structure

✔ clear summaries

✔ reduced cognitive load

This is where editorial judgment creates competitive advantage in AI visibility.

#ContentLeadership #AIstrategy #BusinessGrowth